C# Tips for Compacting Code

This is a little series of things that I’ve picked up over time and use to make my code more compact and, in my opinion, more readable. Your mileage may vary on liking any or all of these, but I figured I’d share them.


To me, compacting code is very important. When I look at a method or a series of methods, I like to be able to tell what they do at a glance and drill into them only if it’s important to me. I think I naturally perceive code the way I do an outline in a Word document: I look on the left for brief, salient points, and then at the smaller text further to the right only if I want to fill in the details. This lets me selectively skim over or delve into methods.

If methods are very vertically verbose, I lose this perspective. If even one or two methods take up all of the vertical real estate in my IDE, I don’t know what’s going on in the class because I’m lost in some confusing method that forces me to think about too many things at once. I don’t ever use that little drop-down in Visual Studio with the alphabetized method list for navigation. If I have to use that to navigate, I consider the class a cohesion disaster.

So, given this line of thought, here are some little tips I have for making methods and code in general more compact without sacrificing (in my opinion) readability.

Null coalescing in foreach

Consider the following method:

public virtual void BindCommands(params ICommand[] commands)
{
    if (commands == null)
        return;

    foreach (var myCommand in commands)
        BindCommand(myCommand);
}

We’re going to take a collection of commands and iterate over them in this method, invoking an individual method to do the dirty work for each one. So, the first thing that we do is guard against null so we don’t trip over a NullReferenceException. We could throw an exception on a null argument, which might be preferable depending on context, but let’s forget about that possibility and assume that failing quietly is actually what we want here. Once we’ve finished with the early return bit, we do the actual, meaningful work of the method.

Let’s compact that a bit:

public virtual void BindCommands(params ICommand[] commands)
{
    var myCommands = commands ?? new ICommand[] { };
    foreach (var myCommand in myCommands)
        BindCommand(myCommand);
}

Now, we’re using the null object pattern and null coalescing operator to take care of the null handling, instead of an early return with an ugly guard condition. We can compact this even more, if so desired:

public virtual void BindCommands(params ICommand[] commands)
{
    foreach (var myCommand in commands ?? new ICommand[] { })
        BindCommand(myCommand);
}

Now, we’ve eliminated the extra code altogether and gotten this method down to its real meat and potatoes: iterating over the collection of commands. The corner case in which this collection might be null is an annoying detail, and we’ve relegated it to that status by not devoting 50% of the method’s real estate to handling it. The syntax here may look a little funny at first if you aren’t used to it, but it shouldn’t induce a double take. We iterate over a collection and do something. The target of our iteration looks a little more involved, but in my opinion, this is a small price to pay for compacting the method and not devoting half of it to a corner case.
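To see the null-coalescing loop behave without a WPF window, here is a minimal, self-contained sketch. FakeCommand and RecordingBinder are hypothetical stand-ins (the real code binds WPF ICommand objects to a window’s InputBindings), and this BindCommand only skips null commands instead of also checking a gesture. It also illustrates a detail of params worth knowing: calling the method with no arguments passes an empty array, not null, so the null case only arises when a caller explicitly passes null.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a command; the post's real code uses WPF's ICommand.
public sealed class FakeCommand
{
    public FakeCommand(string name) => Name = name;
    public string Name { get; }
}

// Hypothetical binder that records what it binds instead of touching a window.
public class RecordingBinder
{
    public List<FakeCommand> Bound { get; } = new List<FakeCommand>();

    public virtual void BindCommands(params FakeCommand[] commands)
    {
        // A null array is swapped for an empty one, so the loop just runs zero times.
        foreach (var myCommand in commands ?? new FakeCommand[] { })
            BindCommand(myCommand);
    }

    // Simplified per-item worker: skips null entries inside the array.
    private void BindCommand(FakeCommand command)
    {
        if (command != null)
            Bound.Add(command);
    }
}
```

Calling binder.BindCommands(null) binds nothing and throws nothing, binder.BindCommands() receives an empty array, and binder.BindCommands(a, null, b) binds only a and b. On .NET Framework 4.6 and later (and .NET Core), Array.Empty<FakeCommand>() can stand in for new FakeCommand[] { } and avoids allocating a fresh empty array on every null call.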

Using params

Speaking of params, let’s use them! Consider the code that the params method above was replacing:

public void BindCommand(ICommand command)
{
    if (command != null && _gesture != null)
        _window.InputBindings.Add(new InputBinding(command, _gesture));
}

Client code for this then looks like:

private void SomeClient()
{
    var myBinder = new Binder(SomeWindow, SomeKeyGesture);
    myBinder.BindCommand(SomeCommand1);
    myBinder.BindCommand(SomeCommand2);
    myBinder.BindCommand(SomeCommand3);
    // etc.
}

As an aside, ignore the fact that it’s odd to bind a bunch of different commands to the same window and key gesture. In the actual code, there’s a lot more going on than I’m displaying here, and I’m trying to leave out anything that would distract from my points. Now consider what client code of the params version above looks like:

private void SomeClient()
{
    var myBinder = new Binder(SomeWindow, SomeKeyGesture);
    myBinder.BindCommands(SomeCommand1, SomeCommand2, SomeCommand3);
}

Now, I personally have a strong preference for that. A bunch of lines of the same thing over and over again drives me batty, even if the things in question need to be parameterized somewhere and this is the place it has to happen. There just seems to be something incredibly vacuous about code like the earlier example, and I always favor more vertically compact code because it lets me take in more of the details at once. By SomeCommand12, I’ve probably figured out what’s going on even on my slowest day; I don’t need another 50 lines besides. If we have to do things like this, let’s at least condense them so they take up as little mindshare in a method as possible.
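A small sketch of why the params call site is a drop-in replacement: the compiler packs comma-separated arguments into an array, so the single-item calls, the params call, and passing an explicit array all bind the same commands in the same order. NameBinder is a hypothetical class that records command names as strings so the example runs without WPF.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical binder that records command names so call sites can be compared.
public class NameBinder
{
    public List<string> Bound { get; } = new List<string>();

    // The "before" style: one call per command.
    public void BindCommand(string command) => Bound.Add(command);

    // The params style: BindCommands("a", "b", "c") arrives here as new[] { "a", "b", "c" }.
    public void BindCommands(params string[] commands)
    {
        foreach (var command in commands ?? new string[] { })
            BindCommand(command);
    }
}
```

Because params is only call-site sugar, existing callers that already hold an array can keep passing it directly, while new callers get the one-line form.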

Optional Parameters

If you haven’t gotten on board with optional parameters since they arrived in C# 4.0, I’d say it’s time. If you have a bunch of code like this:

public void Method1()
{
    Method4(null, null, null);
}

public void Method2(string arg1)
{
    Method4(arg1, null, null);
}

public void Method3(string arg1, string arg2)
{
    Method4(arg1, arg2, null);
}

public void Method4(string arg1, string arg2, string arg3)
{
    Arg1 = arg1;
    Arg2 = arg2;
    Arg3 = arg3;
}

… it’s time to turn it into this:

public void TheOnlyMethod(string arg1 = null, string arg2 = null, string arg3 = null)
{
    Arg1 = arg1;
    Arg2 = arg2;
    Arg3 = arg3;
}
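Here is a runnable sketch of the optional-parameter version, wrapped in a hypothetical ArgHolder class that supplies the Arg1 to Arg3 properties the snippet assigns. It also shows named arguments, which pair naturally with optional parameters: a caller can set only arg3 without spelling out the nulls in front of it.

```csharp
using System;

// Hypothetical holder class providing the Arg1..Arg3 properties from the post.
public class ArgHolder
{
    public string Arg1 { get; private set; }
    public string Arg2 { get; private set; }
    public string Arg3 { get; private set; }

    // One method with optional parameters replaces the Method1..Method4 chain.
    public void TheOnlyMethod(string arg1 = null, string arg2 = null, string arg3 = null)
    {
        Arg1 = arg1;
        Arg2 = arg2;
        Arg3 = arg3;
    }
}
```

Calls like TheOnlyMethod(), TheOnlyMethod("a", "b"), and TheOnlyMethod(arg3: "c") cover every case the overload chain handled. One design caveat: default values are compiled into the calling code, so if a method like this lives in a shared library and its defaults change, callers have to be recompiled to pick up the new values.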

Omit brackets when you have a single line following a branch or loop condition

I used to be a stickler for this:

if (child.SpareRod())
{
    child.Spoil();
}

I reasoned that omitting the brackets was just begging for downstream maintenance problems, and that I’d be a good citizen by not taking shortcuts. I persisted in that way of doing things until quite recently, when I was watching an Uncle Bob video where he said in passing, “I think that there should only be one line of code after an if or else or for, and I’m not going to make it easier on anyone that comes along to mess that up.” (I can’t find the video, so I’m paraphrasing.)

I blew this off at first, but for some reason, it stuck in my head and would occur to me from time to time. Finally, one day, I simply did a 180. I realized that I had only been sticking with the old habit out of stubbornness, having long since decided, almost without noticing, that Bob was right. This practice made my code more compact, and it encouraged me to factor everything following a control flow statement into its own method, leading to much more readable code. I think the bigger benefit comes from the “one line per control flow statement” practice than from the two lines you save by omitting the brackets, but both add up to methods that are much more compact:

if (child.SpareRod())
    child.Spoil();
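The habit described above, factoring a multi-statement branch body into its own method so the if governs exactly one line, might look like the following sketch. OrderProcessor, ApplyLargeOrderPerks, and the 100-unit threshold are made up purely for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical order-processing example; all names are illustrative only.
public class OrderProcessor
{
    public List<string> Log { get; } = new List<string>();

    // Before: several statements under the if, so the brackets are required.
    public void ProcessVerbose(decimal total)
    {
        if (total > 100m)
        {
            Log.Add("discount applied");
            Log.Add("free shipping");
        }
    }

    // After: the body is extracted into a named method, leaving one line per branch.
    public void ProcessCompact(decimal total)
    {
        if (total > 100m)
            ApplyLargeOrderPerks();
    }

    private void ApplyLargeOrderPerks()
    {
        Log.Add("discount applied");
        Log.Add("free shipping");
    }
}
```

Both versions behave identically. The compact one names the intent, and a later change that needs another statement goes into ApplyLargeOrderPerks rather than under the if, which sidesteps the classic trap of adding an unbracketed second line that then runs unconditionally.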

So, I’m out of tips for the day. If people like this, let me know, and perhaps I’ll put together another little post with a few more compactness tips, though it might take me longer to think of them. These were low-hanging fruit that I find myself reaching for often.

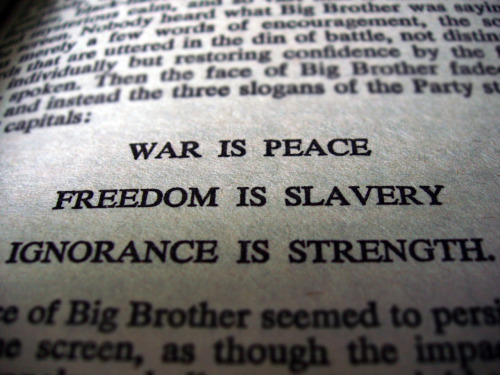


My apologies for the titular pun in reference to “I Love Big Brother” of iconic, Orwellian fame, but I couldn’t resist. The other day, I was chatting with some people about the idea of factoring large methods into smaller, more focused ones and one of the people chimed in with an objection that was genuinely new to me.

If you combine small factored methods and unit tests (which tend to have a natural synergy), you will find that your debugger skills begin to atrophy. Rather than reasoning about the code at runtime, you reason about it at compile time. And that’s a powerful and important concept.
