The Outhouse Anti-Pattern

Source Control Smells?

I was reading through some code the other day, and I came across a class that made me shudder a little. Interestingly, it wasn’t a code smell or a name smell: I wasn’t looking at the class itself, and it had an innocent enough name. It was a source control smell.

I was looking through source control for something unrelated, and for some reason this class caught my eye. I looked at its version history and saw that it had been introduced and then quickly changed dozens of times by several people. Even assuming that you like to check code in frequently, it’ll take you, what, one or two check-ins to write a class, and then maybe a few more to tweak its interface or optimize its internals, if need be? Why were there all these cooks in this kitchen, and what were they doing in there? The changes continued like that over time, with periods of few changes followed by bouts of frenzied, distributed activity. How strange.

I opened the class itself to take a look, and the check-in pattern immediately made sense to me. The class was a giant static class that did nothing but instantiate ICommand implementations and expose them as public fields. I haven’t tagged this post with C# for a reason (this anti-pattern transcends languages), so I’ll briefly explain for readers who aren’t C# savvy. ICommand is an interface that defines Execute and CanExecute methods: sort of like a Command pattern command, but without forcing an implementation of UnExecute() or some such thing.

So, what was going on here was that this class was an already-large and robustly growing dumping ground for user actions in the application. Each field in the class was an instance of an ICommand implementation that accepted delegates for the Execute and CanExecute methods, so they looked something like this:

```csharp
using System.Windows.Input;

public static class ApplicationCommands
{
    // Command is a delegate-backed ICommand; its constructor accepts the Execute and CanExecute delegates
    public static readonly ICommand FooCommand = new Command(ExecuteFoo, CanExecuteFoo);

    public static void ExecuteFoo()
    {
        // Do some foo thing, perhaps with shared state (read: global variables) in the class
    }

    public static bool CanExecuteFoo()
    {
        // Return a boolean based on some shared state (read: global variables) in the class
        return true; // placeholder result
    }

    // Rinse, repeat, dozens and dozens, and probably eventually hundreds and thousands of times.
}
```
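The Command class itself isn’t shown here. For readers new to C#, this is a minimal, hypothetical sketch of a delegate-backed command along those lines, written against .NET’s System.Windows.Input.ICommand interface (which declares Execute(object), CanExecute(object), and a CanExecuteChanged event). The class body is an assumption for illustration, not the code from the project in question; it ignores the command parameter because the listing above uses parameterless delegates.

```csharp
using System;
using System.Windows.Input;

// Hypothetical delegate-backed command, similar in spirit to the Command class used above.
// ICommand requires Execute, CanExecute, and a CanExecuteChanged event.
public sealed class Command : ICommand
{
    private readonly Action _execute;
    private readonly Func<bool> _canExecute;

    public Command(Action execute, Func<bool> canExecute)
    {
        _execute = execute ?? throw new ArgumentNullException(nameof(execute));
        _canExecute = canExecute ?? throw new ArgumentNullException(nameof(canExecute));
    }

    // UI frameworks subscribe to this event and re-query CanExecute when it fires.
    public event EventHandler? CanExecuteChanged;

    // ICommand passes a parameter; this sketch ignores it and calls the parameterless delegate.
    public bool CanExecute(object? parameter) => _canExecute();

    public void Execute(object? parameter)
    {
        // Refuse to run when the command is disabled.
        if (_canExecute())
        {
            _execute();
        }
    }

    // Callers invoke this after changing whatever state the CanExecute delegate reads.
    public void RaiseCanExecuteChanged() => CanExecuteChanged?.Invoke(this, EventArgs.Empty);
}
```

Note that nothing about this class is specific to any one user action; the trouble described below comes from where the instances live, not from the delegate-command idea itself.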

So, the ‘pattern’ here is that if you want to define some new user action, you open this class and find whatever shared global state will inform your command, and then add a new command instance field here.

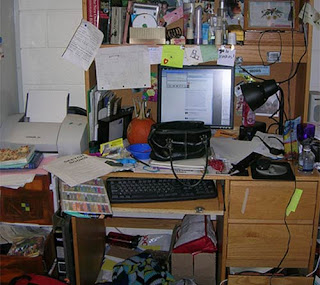

A Suitable Metaphor

I try to stay away from the vulgar in my posting, so I beg forgiveness in advance if I offend, but this reminds me most of an outhouse at a campground. It’s a very public place that a lot of people are forced to stop by and… do their business. The only real reason to use it is that some people (e.g., whoever wrote it) would probably be upset and offended if they caught you not using it; there is no immediately obvious benefit provided by the outhouse beyond generally approved decorum. Like an outhouse, the class tends to smell worse and worse over time and to degenerate noticeably. If you’re at all sensitive to smells, you probably just go in, hold your nose, and get out as quickly as possible. And, like a high-demand outhouse, it has definite “merge conflicts”: people are not only forced to hold their noses, but to wait in line for the ‘opportunity’ to do so.

There are some periodic attempts to keep the outhouse clean, but this is very unpleasant for whoever is tasked with doing so. And, in spite of the good intentions of this person, the smell doesn’t exactly go away. Basic etiquette emerges in the context of futile attempts to mitigate the unpleasantness, such as signs encouraging people to close the lid or comments in the code asking people to alphabetize, but people are generally in such a hurry to escape that these go largely unheeded.

At the end of the day, the only thing that’s going to stop the area from being a degenerative cesspool is the removal of the outhouse altogether.

Jeez, What’s So Bad About This?

Equating this class with an outhouse exaggerates my tone a bit, but I certainly think of it as an anti-pattern. Here’s why.

- What is this class’s single responsibility? The Single Responsibility Principle (SRP) wisely advises that a class should have exactly one reason to change. This class has as many reasons as it has commands. And, you don’t get to cop out by claiming that its single responsibility is to define and store information about everything a user might ever want to do (see God Class).

- This class is designed to be modified each and every time the GUI changes. That is, this thing will certainly be touched with every release, and it’ll probably be touched with every patch. The pattern here is “new functionality: remember to modify ApplicationCommands.cs”. Not only is that a gratuitous violation (even a complete reversal) of the Open/Closed Principle, but if you add a few more outhouses to the code base, people won’t even be able to keep track of all the changes needed to alter some small piece of the software’s functionality.

- Dependency inversion is clearly discouraged. While someone could always access the static field and inject it into another class, the entire purpose of making these public fields in a static class is to encourage using them inline as global ‘constants’, from anywhere. Client code is not marshaling all of these at startup and passing them around, but rather simply using them in ad-hoc fashion wherever they are needed.

- Besides violating the S, O, and D of SOLID, what if I want a different command depending on who is logged in? For example, let’s say that there is an Exit command that exits the application. Perhaps I want Exit to close the application immediately when a read-only user is logged in, but to prompt with “are you sure?” for a read-write user. Because these commands are static fields, I have to define two separate commands and switch between them depending on which user is logged in. Imagine how nasty this gets if there are many kinds of users who need many different commands. The rate of bloat of this class just skyrocketed from linear in functionality to proportional to F*R*N, where F is the number of functionality items, R is the number of roles, and N is the nuance of behavior within roles. And the rate of bloat of the client classes just went up a lot too, as they’ll need to figure out who is logged in and thus which of the commands to request.

- And, of course, there is the logistical problem that I noticed in the first place. This class is designed to force multiple developers to change it, often simultaneously. That’s a lot like designing a traffic light that’s sometimes green for everyone.

Alternative Design

So, how do we work toward a more SOLID, flexible design? As a first step, I would make this an instance class and hide the delegate methods. This would force clients to be decoupled from the definitions, except in one main place. The second step would be to factor the commands with more complex delegates into their own implementations of ICommand, and thus their own classes. Simpler commands could be instantiated inline, with their delegate methods collapsed into lambda expressions. Once everything had been factored into a dedicated class or flagged as declarable inline, the logic for generating these commands could be moved into some sort of instance factory class that creates the various kinds of command.

Now we’re getting somewhere. The command classes have one reason to change (the command changes), and the factory has one reason to change (the logic for mapping requests to command instances changes), so we’re looking better on SRP. The Open/Closed Principle looks more attainable too, since the only class that won’t be closed for modification as new functionality is added is the factory itself. Dependency inversion is looking up as well: clients now invoke a factory that provides ICommand instances, rather than going out and searching for specific concrete instances on their own.
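A minimal sketch of where those steps might lead, again assuming .NET’s System.Windows.Input.ICommand. Every name here (DocumentState, DelegateCommand, SaveCommand, CommandFactory, and the "Save" and "ClearLog" keys) is hypothetical: a command with real logic gets its own class, a trivial one is declared inline with lambdas, and the factory is the single place that maps a request to a command. The state that used to live in static fields arrives through the factory’s constructor instead.

```csharp
using System;
using System.Collections.Generic;
using System.Windows.Input;

// Hypothetical container for state that used to live in static fields of ApplicationCommands.
public sealed class DocumentState
{
    public bool IsDirty { get; set; }
    public List<string> Log { get; } = new List<string>();
}

// Delegate-backed command for the simple cases, so they can be declared inline with lambdas.
public sealed class DelegateCommand : ICommand
{
    private readonly Action _execute;
    private readonly Func<bool> _canExecute;

    public DelegateCommand(Action execute, Func<bool> canExecute)
    {
        _execute = execute;
        _canExecute = canExecute;
    }

    public event EventHandler? CanExecuteChanged;
    public bool CanExecute(object? parameter) => _canExecute();
    public void Execute(object? parameter) { if (_canExecute()) _execute(); }
    public void RaiseCanExecuteChanged() => CanExecuteChanged?.Invoke(this, EventArgs.Empty);
}

// A command with more involved logic gets its own class, with one reason to change.
public sealed class SaveCommand : ICommand
{
    private readonly DocumentState _state;

    public SaveCommand(DocumentState state) => _state = state;

    public event EventHandler? CanExecuteChanged;
    public bool CanExecute(object? parameter) => _state.IsDirty;

    public void Execute(object? parameter)
    {
        if (!CanExecute(parameter)) return;
        _state.Log.Add("saved");   // stand-in for real persistence
        _state.IsDirty = false;
        CanExecuteChanged?.Invoke(this, EventArgs.Empty); // saving disables the command
    }
}

// The one place that maps a request to a command; it receives the former globals explicitly.
public sealed class CommandFactory
{
    private readonly DocumentState _state;

    public CommandFactory(DocumentState state) => _state = state;

    public ICommand Create(string name)
    {
        switch (name)
        {
            case "Save":
                return new SaveCommand(_state);
            case "ClearLog":
                // A trivial command declared inline instead of as a pair of public static methods.
                return new DelegateCommand(() => _state.Log.Clear(), () => _state.Log.Count > 0);
            default:
                throw new ArgumentException($"Unknown command '{name}'.", nameof(name));
        }
    }
}
```

Clients depend only on CommandFactory and ICommand. Adding a command means adding a class (or an inline lambda pair) plus one case in the factory, which is the one spot deliberately left open for modification.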

The one wrinkle is the shared global state from the old static commands class. It would have to be passed into the factory and injected into the commands as needed. If that starts to look daunting, don’t be discouraged. Sure, passing 20 or 30 dependencies into your factory looks really ugly, but the ugliness was there all along; you were just hiding it in the outhouse. Now that the outhouse is gone, you’ve got a bit of work to do shoveling the… you get the idea. The point is, at least you’re aware of these dependencies now and can create a strategy for managing them and getting them into your commands.

If necessary, this can be taken even further. To address the role-based complaint (the fourth point above), if that is really an issue, the Abstract Factory pattern can be used. Alternatively, one could use reflection to build a store of all instances of ICommand in the application, keyed by their names. This is a convention-over-configuration solution that would let clients access commands by knowing only their names at runtime, and nothing about them beyond their interface definition. In this scheme, Open/Closed is followed religiously, as there is no factory to modify with each new command: one can add functionality by changing a line of markup in the GUI and adding a new class. (I would advise against this strategy for the role/nuance-based command scheme.) Another alternative would be to read the commands in from metadata, depending on how simple or complex the command semantics are and how easily they could be stored as data. This is perhaps the most flexible solution of all, though it may be too flexible and become problematic if the commands are somewhat involved.
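A rough sketch of the reflection-based, convention-over-configuration store, again assuming System.Windows.Input.ICommand. The naming convention (a class called ExitCommand is registered as "Exit") and both command classes are hypothetical; the scan only picks up concrete classes with a public parameterless constructor.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using System.Windows.Input;

// Two hypothetical commands; by convention, the key is the class name minus the "Command" suffix.
public sealed class ExitCommand : ICommand
{
    public event EventHandler? CanExecuteChanged { add { } remove { } } // never raised in this sketch
    public bool CanExecute(object? parameter) => true;
    public void Execute(object? parameter) => Console.WriteLine("Exiting...");
}

public sealed class AboutCommand : ICommand
{
    public event EventHandler? CanExecuteChanged { add { } remove { } } // never raised in this sketch
    public bool CanExecute(object? parameter) => true;
    public void Execute(object? parameter) => Console.WriteLine("About this application");
}

public static class CommandRegistry
{
    private const string Suffix = "Command";

    // Scans the assembly for concrete ICommand classes with a public parameterless constructor,
    // instantiates each one, and keys it by its conventional name.
    public static IReadOnlyDictionary<string, ICommand> Build(Assembly assembly)
    {
        return assembly.GetTypes()
            .Where(t => t.IsClass && !t.IsAbstract
                        && typeof(ICommand).IsAssignableFrom(t)
                        && t.GetConstructor(Type.EmptyTypes) != null)
            .ToDictionary(
                t => t.Name.EndsWith(Suffix)
                    ? t.Name.Substring(0, t.Name.Length - Suffix.Length)
                    : t.Name,
                t => (ICommand)Activator.CreateInstance(t)!);
    }
}
```

A GUI could then resolve the name written in its markup, for example `commands["Exit"]`, without ever referencing ExitCommand. Because instances are created with no constructor arguments, commands that need injected state don’t fit this convention as written; they would need a factory or container to build them.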

These are just ideas for possible directions; there is no magic bullet. I’d say the important thing, in general, is to recognize when you find yourself building an outhouse and to be aware of the problems it’s going to cause you. From there, my suggestions are all well and good in the abstract, but it will be up to you to determine how to transition to a better design.

There seem to be a few different themes here. The first one I see is one with which I have struggled myself in the past: chafing at being forced to change the way you do things to conform to a group convention. I touched on that


In light of this development, writing code that is hard to maintain and declaring that this is just how you do things is a lot like writing a piece of code with an awful user interface and saying to your users “what do you want from me — it works, doesn’t it?” You’re correct, and you’re destined to go out of business. If I have a programmer on my team who consistently and proudly writes code that only he understands and only he can decipher, I’m hoping that he’s on another team as soon as possible. Because the fact of the matter is that anybody can write code that meets the requirements, but only a creative, intelligent person can do it in such a way that it’s quickly understood by others without compromising the correctness and performance of the solution.


What do they mean when they say cleverness is bad, anyway? Why say something like that? The aforementioned Bob Martin, in a video presentation I once watched, said something like “you know you’re looking at good code when someone reading it is saying ‘uh-huh’, ‘uh-huh’, ‘yep’, ‘makes sense’, ‘uh-huh’”. Contrast this with code where your first reaction is “What on Earth…?!?” That is often the reaction to non-functional or incorrect code, but it is just as frequently the reaction to ‘clever’ code.