Generative AI and the Bullshit Singularity

I haven’t forgotten about my promise to discuss the concept of facadeware. The slings and arrows of outrageous fortune continue their assault on me as I navigate, among other things, two relocations in two months. I want to write the series, and I plan to write the series, but I’ve been busy. Nonetheless, thanks to those who read the first installment and to those behind the smattering of donations that were eventually refunded.

Anyway, as I thought about how to continue that series, I realized that I’d have to talk about generative AI. In the year of our Lord 2025, if I were to avoid talking about GenAI for as long as 15 minutes, I’m pretty sure some kind of Harrison-Bergeron-universe agent would break into my house and electrocute me.

Generative AI Isn’t Facadeware

First, let me say that what I’m describing as facadeware predates generative AI’s explosion onto the mainstream in 2022. I also don’t think GenAI is an example of facadeware. At least, not exactly.

In the previous post, I briefly defined facadeware as “superficially advanced gadgetry that actually has a net negative value proposition.” And while GenAI clearly has a net-negative value proposition (to date and for the foreseeable future), I wouldn’t categorize it as superficially advanced. It is genuinely advanced, and it is an impressive feat of experimentation and human ingenuity.

And so because of this, GenAI/LLM techs don’t really have a place in the facadeware series (though I think the concept of “agentic AI” does qualify). However, I want to dump my bucket on this subject both because I know people will invariably bring it up for discussion and because I think a relationship, if not direct, does exist.

The Role of Bullshit in GenAI and Facadeware

Bullshit, as a concept, plays a foundational but different role in GenAI and in facadeware. The role of bullshit in facadeware is relatively simple. Selling anything with a net-negative value proposition, almost by definition, requires bullshit. Bullshit is the fuel of the facadeware engine.

GenAI kind of inverts this. With GenAI, the fuel of the engine is human ingenuity, and the output is bullshit. In other words, some of the best and brightest minds in all of enterprise Silicon Valley have produced a technological advancement that is to bullshit what cold fusion would be to energy output. It was an improbable and unexpected giant leap forward in humanity’s collective capacity to generate bullshit.

So if you were to look for a relationship between facadeware and GenAI, the most likely scenario is that you would use GenAI either to generate facadeware or simply to market it.

Defining Bullshit Somewhat Rigorously

Now, before I go and make you think this is a simple exercise in Luddite shitposting, let me be clear that I actually have nothing against bullshit in moderation. Anything you do on social media is more or less bullshit, and plenty of self-soothing and self-indulgent narratives, like schadenfreude fantasies, are bullshit.

So let’s actually define bullshit with some precision before I lumber onward with this rant. Google’s built-in dictionary gives us a short, sweet take:

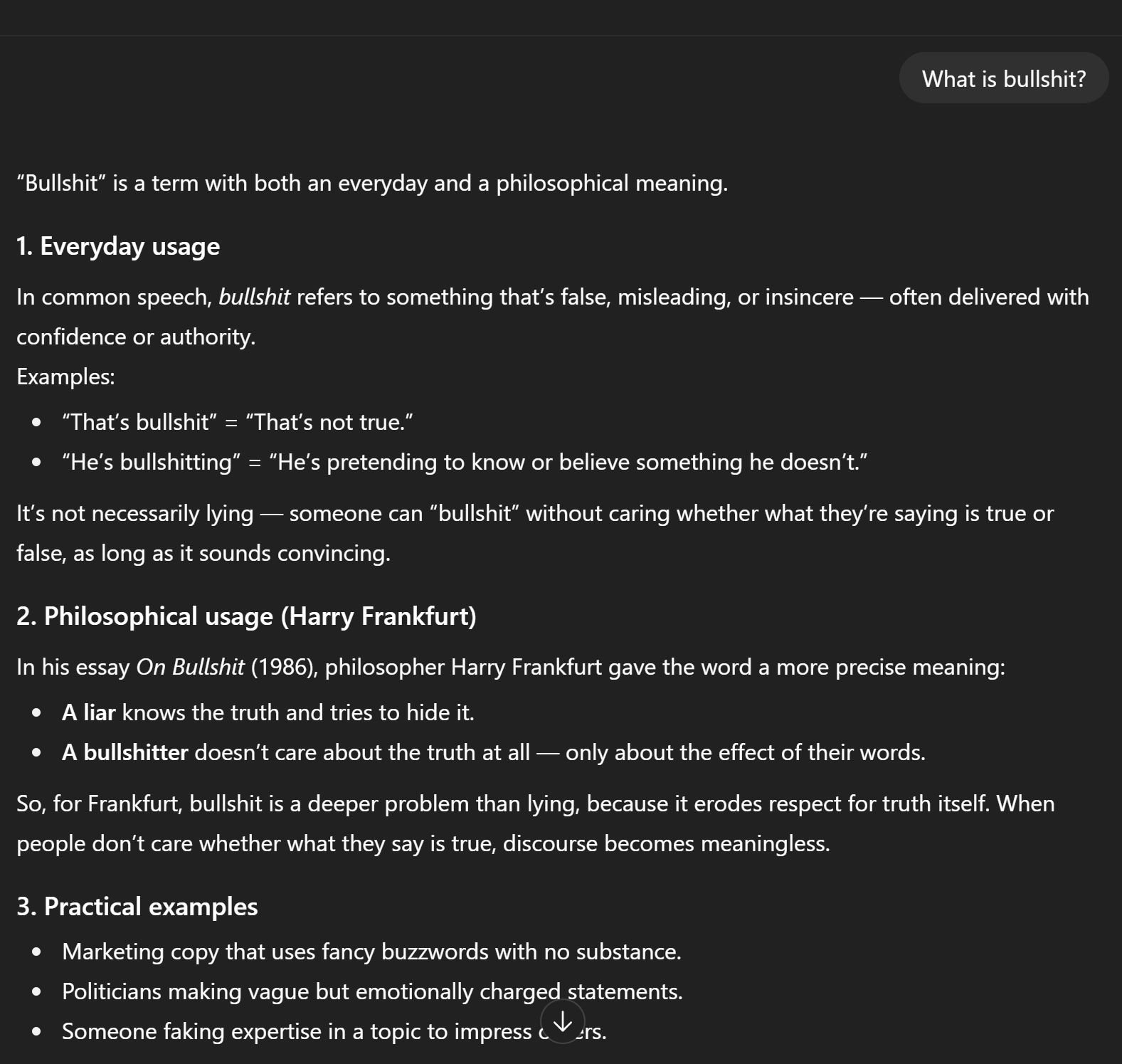

But let’s get meta-weird and ask ChatGPT, just for grins.

This is a particularly choice question and answer for a technology that can pass a Turing Test without a whiff of meaningful self-awareness. Let’s zoom in on point 2 here, Frankfurt’s explanation that “a bullshitter doesn’t care about the truth at all—only about the effect of their words.”

So you can see here that I’m not shitposting in the slightest. Frankfurt’s concept of a bullshitter is the core principle of how LLMs interact with humans: they predict the next word that will have the most positive effect on the user, without any care for, or even concept of, truth. By Frankfurt’s definition, ChatGPT and its ilk are literal bullshit manufacturing machines. Bullshit dynamos, if you will.

Scratching the Consumer Bullshit Itch

Once you understand this, the resonance of the term “AI slop” makes sense. It’s an efficiently derisive way to categorize content and output meant only to manipulate the consumer, without regard for truth.

But again, I don’t mean this entirely negatively, though it has extremely obvious downsides. For some reason, this week, social media channels have been showing me meme videos of old men skiing with walkers and Stephen Hawking navigating skate park obstacles with his wheelchair. The first one or two of those I saw made me think, “I guess that’s kinda funny, in an unexpected way.”

And what is social media, if not voluntary bullshit consumption? You’ve got three minutes to kill while waiting at the dentist, so you pop open Facebook and think to yourself, “Alright, show me some bullshit.” And GenAI techs scratch this itch with remarkable efficiency.

But you can go a lot deeper with that. As I understand it, people are building weird relationships with ChatGPT. And why not? If your significant other has just unceremoniously dumped you, I have no doubt that you can get a sympathetic ChatGPT to comfort you with bullshit about how you’re better off, they’re worse off, and they’ll probably spend the rest of their life regretting the choice.

We opt into bullshit all the time. It’s an interesting part of our social fabric. And we now have an endless supply, on demand.

Bullshit’s Role in the Corporate World

Speaking of bullshit, anyone who has followed this column for long enough knows that I’m not a fan of the job interview as an institution. For more than a decade, I’ve advocated that we simply, collectively, stop doing job interviews. Why? Well, among other reasons, they’re a master class in bullshit.

Two parties talk at each other, each presenting a distorted, unrealistic picture of themselves that ranges from “generous” to “unmoored from reality.” And remember, if you’re indifferent to or unaware of the actual truth, then you’re in bullshit land and technically not lying.

This is one of the best use cases I can possibly imagine for a bullshit machine. You can have the bullshit machine help you come up with some bullshit about yourself, summarize the bullshit from the other party, and ideally start delegating the bullshit interactions to the technology itself. The logical outcome of the job interview as an institution, in my mind, is two chatbots yammering nonsense at each other. It has the same amount of value, but without wasting either party’s time.

But even beyond my cynical take, or the similarly cynical take behind David Graeber’s Bullshit Jobs, bullshit has a storied role in the workplace, and a bullshit machine is a godsend for plenty of workers:

- “Ugh, I don’t want to write a status report about what I’ve done this week. ChatGPT, write some bullshit up for me.”

- “I don’t want to read all that. ChatGPT, summarize this bullshit for me.”

- “I don’t really care what this says. Write some bullshit for my mailing list.”

- “Seriously, another forms-over-data app. Write this bullshit boilerplate for me.”

- “Look, there’s no way I’m spending time documenting this. Just write some bullshit for me.”

But What About Legitimate Use Cases?

Let me pump the brakes a bit here and address the nuance of GenAI use cases that don’t adopt a fundamentally nihilistic attitude towards other parties. Let’s call them good faith use cases, for lack of a better term off the cuff. I’ll share some things that I use GenAI for (and I do use it, daily and heavily, so believe me when I say this post is an exercise in taxonomy, not a call to action).

- Reviewing contracts and other agreements that I probably wouldn’t bother to review otherwise.

- Brainstorming, especially if I already have a few ideas and want a few more.

- Context-dependent information synthesis, such as tooling recommendations or specific troubleshooting advice.

- Describing queries/scripts/methods/etc. that I want and starting from “mostly coded” rather than from scratch.

When using the techs in these contexts and plenty of others, I am categorically not seeking or interested in bullshit. In fact, I’d prefer ironclad facts, analysis, and diligence. But be that as it may, I will receive bullshit in response to these requests, and it will be up to me to separate truth (maybe 85%) from untruth (maybe 15%) in the results. And these use cases each have a built-in bullshit quality control process. Specifically and in order:

- I read the sections of the contracts it highlights and decide if its concerns are valid or not.

- With the ideas it gives me, I scan and decide if they’re stupid or not.

- With tool recommendations, I do separate diligence, and with troubleshooting, I routinely give it screenshots to show where it went off the rails making things up.

- I execute the code immediately to validate it and then sanity-check it for code quality.

Nothing but bullshit ever comes out of these things, by definition and by their fundamental nature. It’s just that some use cases are bullshit-tolerant. (And the ones from earlier are bullshit-forward.)

Framing LLM Requests: “Give Me Some Bullshit That…”

What comes out of this is something that I’ve found extremely helpful when using the tools. Whenever I’m typing context and asking for information, summaries, code, or prose, I mentally tee it up as “{context}, now give me your bullshit.”

This isn’t some kind of Gen-X-er cynicism. Rather, it’s to keep my expectations and sense of trust grounded in the face of a confident, articulate sycophant aiming to please me at all costs.

If it confidently tells me to eat rocks, I can laugh at it. But if it confidently tells me how an offer of judgment works in Michigan civil court cases, it’s important to remember that its authoritative, impressive-sounding answer is bullshit that stands a decent chance of being mostly true.

And this dynamic isn’t going anywhere, nor is it likely to improve much. So-called “hallucinations” (read: bullshit) are a fundamental characteristic of this technology. And given that these models are increasingly learning from their own tidal wave of bullshit, I think I’ll need my wits about me.

In a sense, it feels as though we’re barreling toward some kind of bullshit singularity as these things spew more and more content out into the world. Perhaps it’s a fitting development to accompany the rise of social media and the fact that we seem to live in a post-truth, choose-your-own-facts kind of world.

Tying Back to Facadeware

And that’s really all I have to say about LLM technologies in the context of facadeware, or really in general. They’re powerful, useful, frustrating, ubiquitous, and increasingly disappointing as we plummet toward the trough of disillusionment, but they’re also always interesting. I use them and have come to find them indispensable. I’m also going in with eyes wide open about what they are: bullshit machines with a hunger for energy that will eventually require a Dyson Sphere to power.

But whatever else they are, they’re not facadeware. Their bullshit will absolutely be used in service of selling facadeware. And the general rising tide of bullshit in the world will certainly exacerbate the facadeware problem. But apart from perhaps agentic AI implementations, I won’t be talking about them any further as I lay out my facadeware thesis.